Building Ventures: the Builder's Handbook

Building Ventures: the Builder's Handbook is not just a book; it's a blueprint for revolutionizing the landscape of startup creation. This handbook dives into the art and science of systematically constructing successful businesses within the dynamic and demanding realms of venture builders.

Crafted for innovators, entrepreneurs, and visionaries, this handbook is a treasure trove of insights, strategies, and wisdom gleaned from the trenches of startup development. It's an essential guide for anyone involved in the intricate process of nurturing multiple startups, especially within specialized market verticals.

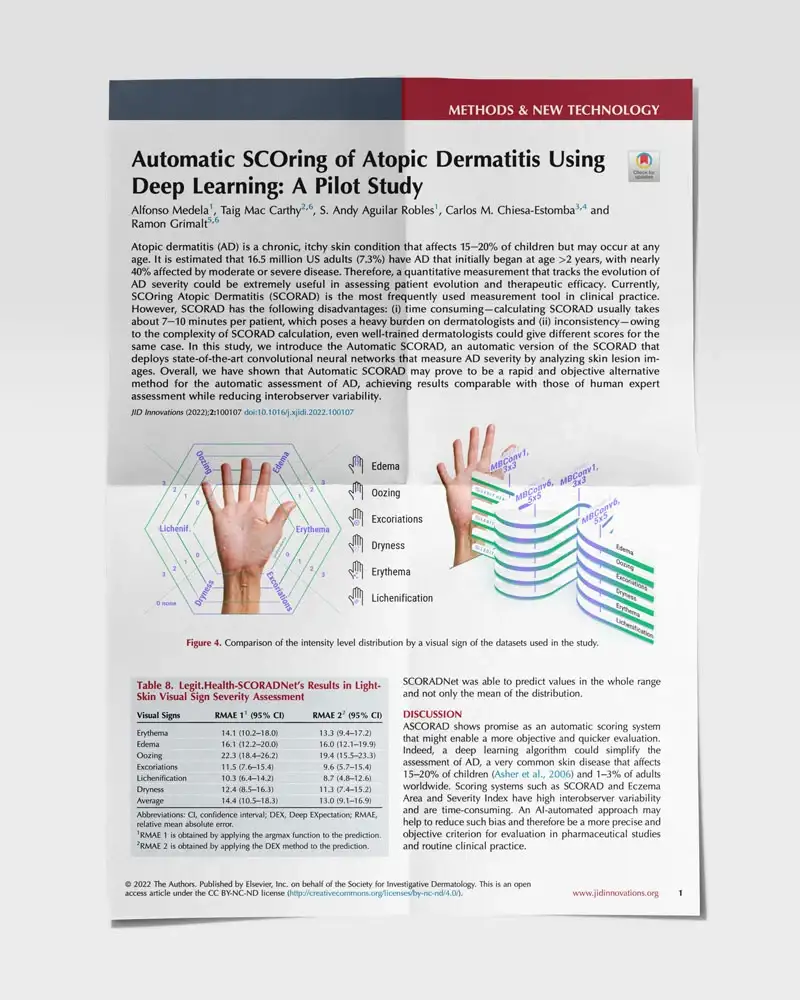

Learn moreAutomatic SCOring of Atopic Dermatitis using Deep Learning

In recent years, artificial intelligence (AI) has achieved human-expert-like performance in a wide variety of tasks such as skin cancer classification, detection, and lesion segmentation.

In this study, we introduce the Automatic SCORAD (ASCORAD), an automatic version of the SCORAD that provides a quick, accurate, and fully automated scoring method.

ASCORAD shows promise as an automatic scoring system that might enable a more objective and quicker evaluation. Indeed, a deep learning algorithm could simplify the assessment of AD, a very common skin disease that affects 15-20% of children (Asher et al., 2006) and 1-3% of adults worldwide.

Learn moreBecome part of a worldwide growth-hacking revolution

This innovative approach to viral marketing is revolutionizing how startups reach out to their potential clients and how companies launch products and run marketing strategies. Think like an entrepreneur. Write like a journalist. Launch like a boss.

The main idea behind News-Worth Dynamics is that all stories need to have something to make them newsworthy. Not all information is newsworthy. Stories need to supply certain elements of interest to qualify as news. These elements of interest form the Seven Traits of News-Worth.

With that in mind, we created a handy tool that we call the News-Worth Canvas: a visual template for describing, analyzing, and creating viral marketing.

Learn more👋 Contact me

Feel free to reach out through this form.